GTFS Data vs. Service Quality

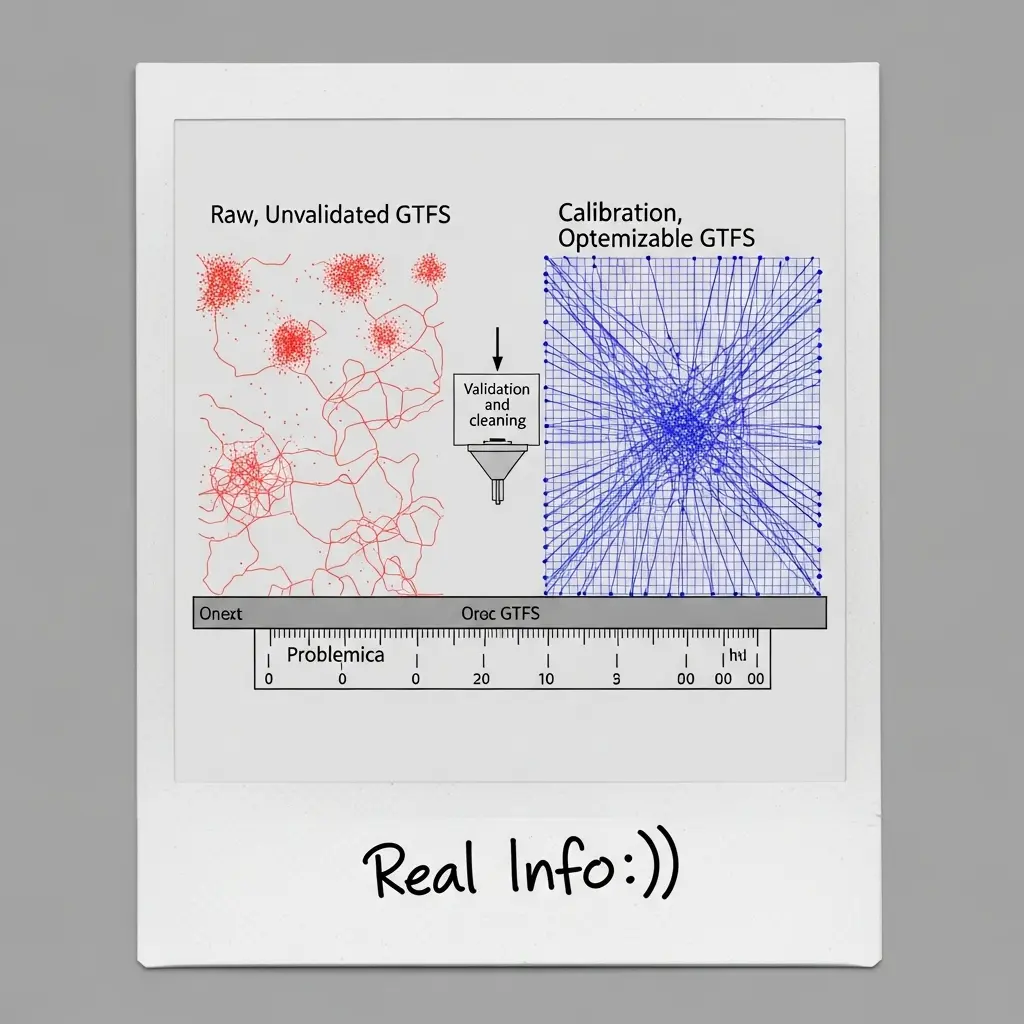

Every public transport system runs on two essential fuels: the diesel or electricity that powers its vehicles, and the data that powers its decisions. While the first is meticulously measured and managed, the second—specifically your GTFS data—often operates in the shadows. The quality of this data, however, is the invisible foundation upon which every optimization effort is built. Get it wrong, and even the most sophisticated algorithms are steering in the dark.

The General Transit Feed Specification (GTFS) is more than a digital schedule; it is the language your scheduling software uses to understand your network. But what happens when this language is full of errors, omissions, or outdated phrases? The consequences are not merely technical; they ripple out to the platform, the passenger, and your bottom line.

The Problem: The Hidden Cost of "Good Enough" Data

For transit planners, the pain point is insidious. You invest in advanced optimization tools to boost efficiency, yet the promised results feel just out of reach. The culprit is often not the tool itself, but the flawed data it's being fed. Poor GTFS quality creates a silent drain on performance:

-

The Efficiency Mirage: Your optimization engine calculates the "perfect" vehicle allocation, but it's based on incorrect travel times or stop sequences. The result? Theoretical savings that vanish in execution, as drivers confront the reality of inaccurate run times.

-

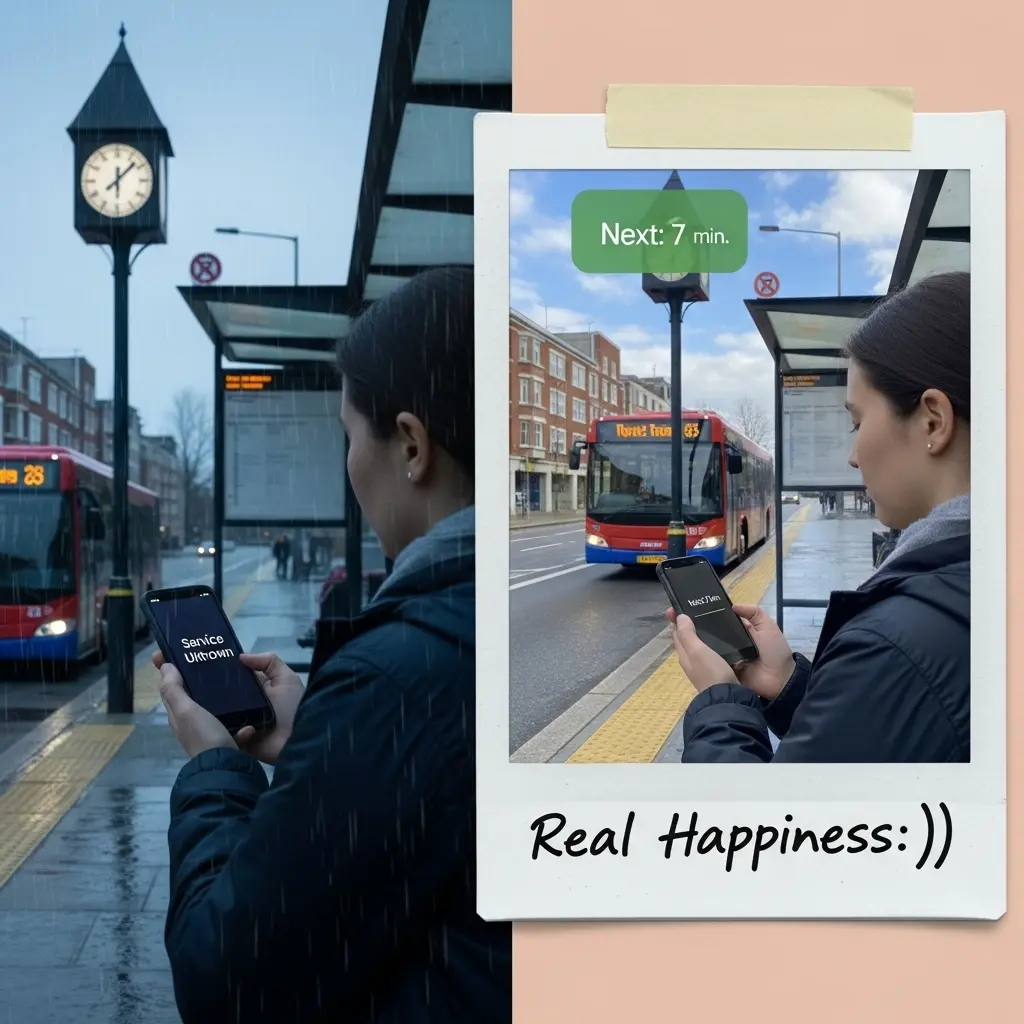

Erosion of Passenger Trust: When real-time arrival predictions constantly miss the mark due to faulty underlying schedule data, passenger frustration grows. Reliability isn't just about vehicle tracking; it starts with a correct foundational schedule.

-

Resource Misallocation: Poor data leads to poor decisions. You may be over-supplying underused routes or straining resources on busier ones because your demand models are based on inaccurate frequency or zone data.

-

Stifled Innovation: High-quality, reliable GTFS is the prerequisite for next-level services like dynamic scheduling, microtransit integration, or sophisticated multi-modal journey planners. "Dirty data" locks you out of the future.
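To make the first point concrete, here is a minimal sketch of one check for incorrect travel times or stop sequences: it flags trips whose scheduled times run backwards once stops are ordered by `stop_sequence`. The column names come from the GTFS `stop_times.txt` file; the function names and the sample rows are illustrative assumptions, and the sketch assumes every row has both times filled in, which real feeds do not guarantee.

```python
import csv
import io
from collections import defaultdict


def to_seconds(hhmmss: str) -> int:
    """Convert a GTFS time such as '24:03:00' to seconds after the service day starts."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def find_time_regressions(stop_times_csv: str) -> list[tuple[str, int]]:
    """Return (trip_id, stop_sequence) pairs where a trip appears to travel back in time."""
    trips = defaultdict(list)
    for row in csv.DictReader(io.StringIO(stop_times_csv)):
        trips[row["trip_id"]].append(
            (
                int(row["stop_sequence"]),
                to_seconds(row["arrival_time"]),
                to_seconds(row["departure_time"]),
            )
        )

    problems = []
    for trip_id, rows in trips.items():
        rows.sort()  # order each trip by stop_sequence
        previous_departure = None
        for sequence, arrival, departure in rows:
            leaves_before_arriving = departure < arrival
            arrives_before_last_departure = (
                previous_departure is not None and arrival < previous_departure
            )
            if leaves_before_arriving or arrives_before_last_departure:
                problems.append((trip_id, sequence))
            previous_departure = departure
    return problems


# Hypothetical sample data: trip T1 reaches stop C before it has left stop B.
SAMPLE = """trip_id,arrival_time,departure_time,stop_id,stop_sequence
T1,08:00:00,08:00:30,A,1
T1,08:05:00,08:05:30,B,2
T1,08:04:00,08:04:30,C,3
T2,23:55:00,23:56:00,A,1
T2,24:03:00,24:03:30,B,2
"""

print(find_time_regressions(SAMPLE))  # [('T1', 3)]
```

Trip T2 passes because GTFS allows times beyond 24:00:00 for service that continues past midnight, so the comparison is done in seconds rather than on clock strings.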

Analysis: Data Quality as a Scientific Imperative, Not an IT Chore

Optimizing a network with flawed data is like calibrating a precision instrument with a warped ruler. The pursuit of operational excellence must begin with data integrity. This isn't just an IT task; it's a fundamental operational discipline.

Effective optimization requires a holistic, systems-level view. The GTFS feed is the digital twin of your physical operation. If this twin is inaccurate—if stop locations are imprecise, if timepoints are estimates rather than measurements—then any optimization model inherits those flaws. The algorithm's output is only as valid as its input.

A rigorous analysis of data quality must precede any advanced operational analysis. Distinguishing between network performance issues and data quality issues is the first critical step. For example, as explored in our GTFS Data Management use cases, the geospatial accuracy of stop locations directly affects deadhead time calculations and, consequently, fleet utilization rates.
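As a rough illustration of the stop-location point, the sketch below compares the coordinates published in a feed against independently surveyed ones and flags stops that drift beyond a tolerance. The haversine formula is standard; the coordinates, the 25 m tolerance, and the function names are assumptions made for the example, and straight-line distance is only a proxy for the road distance a deadhead run actually covers.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def stops_off_position(feed_stops: dict, surveyed_stops: dict, tolerance_m: float) -> list[str]:
    """Return stop_ids whose feed position is further than tolerance_m from the surveyed one."""
    flagged = []
    for stop_id, (lat, lon) in feed_stops.items():
        if stop_id not in surveyed_stops:
            continue  # nothing to compare against
        ref_lat, ref_lon = surveyed_stops[stop_id]
        if haversine_m(lat, lon, ref_lat, ref_lon) > tolerance_m:
            flagged.append(stop_id)
    return flagged


# Hypothetical coordinates: stop B sits 0.01 degrees of latitude (about 1.1 km) off.
feed = {"A": (52.5200, 13.4050), "B": (52.5300, 13.4050)}
surveyed = {"A": (52.5200, 13.4050), "B": (52.5200, 13.4050)}

print(stops_off_position(feed, surveyed, tolerance_m=25))  # ['B']
```

An offset of this size on a first or last stop would be added to every deadhead run that starts or ends there, which is how a single bad coordinate can distort fleet utilization figures.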

Relying on unchecked, manually assembled feeds traps you in a cycle of "garbage in, garbage out." The goal is to establish proactive data governance—treating schedule data as a living, validated asset, not a static file. This mirrors the philosophy behind our network planning optimizations, where high-fidelity data enables scenario modeling that reflects real-world possibilities, not just spreadsheet theories.
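One small building block of such governance could be an automated gate that runs before a feed is published or handed to an optimization tool. The sketch below only checks that the files a conventional fixed-route feed is expected to contain are present in the zip archive; the file list is a simplification of the GTFS specification, the function names are hypothetical, and a real pipeline would layer field-level validation on top.

```python
import io
import zipfile

# Simplified expectation for a fixed-route feed (an assumption, not the full spec).
EXPECTED_FILES = {"agency.txt", "stops.txt", "routes.txt", "trips.txt", "stop_times.txt"}
CALENDAR_FILES = {"calendar.txt", "calendar_dates.txt"}  # at least one is needed


def missing_core_files(feed_bytes: bytes) -> set[str]:
    """Return the names of expected files that are absent from a zipped feed."""
    with zipfile.ZipFile(io.BytesIO(feed_bytes)) as feed:
        present = set(feed.namelist())
    missing = EXPECTED_FILES - present
    if not (CALENDAR_FILES & present):
        missing.add("calendar.txt or calendar_dates.txt")
    return missing


def build_demo_feed(names: list[str]) -> bytes:
    """Create an in-memory zip with empty files, purely for demonstration."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as feed:
        for name in names:
            feed.writestr(name, "")
    return buffer.getvalue()


demo = build_demo_feed(["agency.txt", "stops.txt", "routes.txt", "trips.txt"])
print(sorted(missing_core_files(demo)))
```

Running a check like this on every export, rather than occasionally by hand, is what turns the feed from a static file into a validated asset.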

Conclusion: Quality Data is the Bedrock of Quality Service

The journey to a more efficient, responsive, and passenger-centric transit system begins long before an optimization engine runs. It begins with a commitment to data excellence. Superior GTFS quality transforms your data from a passive administrative record into an active strategic asset.

In an era where passengers expect app-based precision and agencies demand tangible ROI from tech investments, there is no room for foundational errors. By ensuring your GTFS data is accurate, complete, and meticulously maintained, you do more than improve schedules—you build trust, unlock true operational potential, and lay the groundwork for the agile transport network of tomorrow.

For a deeper operational perspective on structural waste in transit operations, also read our article on empty kilometers and dead miles.

Stop wondering why your optimization results don't match expectations. Start by examining the data that fuels them.
